QUIC’s (hidden) Super Powers

Most of QUIC’s killer features aren’t obvious or documented. Time to change that.

The Basics

Before we can cover the advanced stuff, we need to cover the basics.

You can still use TCP (or WebSockets) in [CURRENT_YEAR] but you’re missing out. QUIC is the new transport protocol on the block and it’s going to slowly take over the internet.

QUIC

Imagine you’re a humble (and handsome) application developer like myself. You hear about this cool new protocol from this cool website with a cool domain name. Life is good, but you could always spice it up with some new technology.

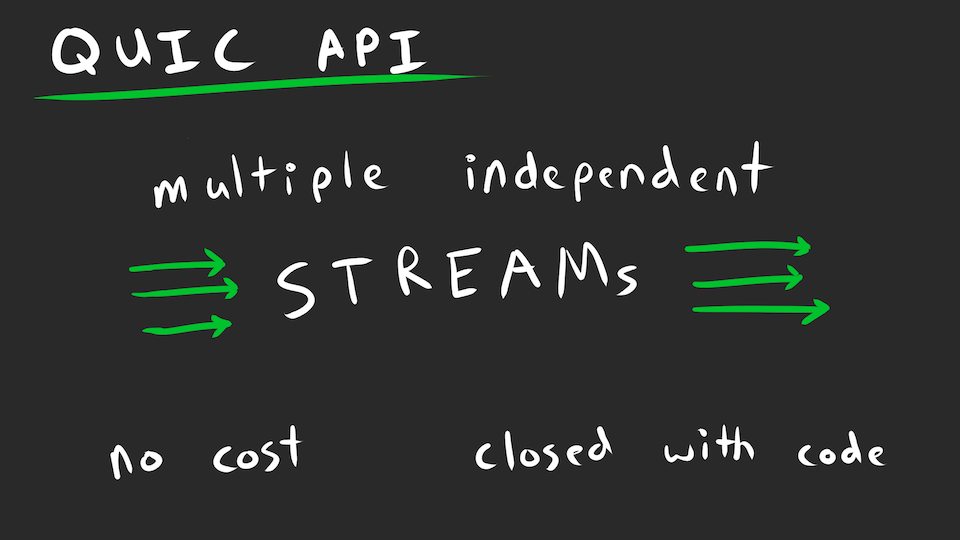

Before we can answer the WHY let’s cover the HOW. What is the QUIC API? How do I use it?

To oversimplify, QUIC gives you a byte stream like TCP. But unlike TCP, there’s (almost) no cost to open/close additional streams on either side: no handshake and no extra round trip.

Why is this useful?

- If you’ve ever used a connection pool, delete that shit and use QUIC.

- If you’ve ever multiplexed streams, delete that shit and use QUIC.

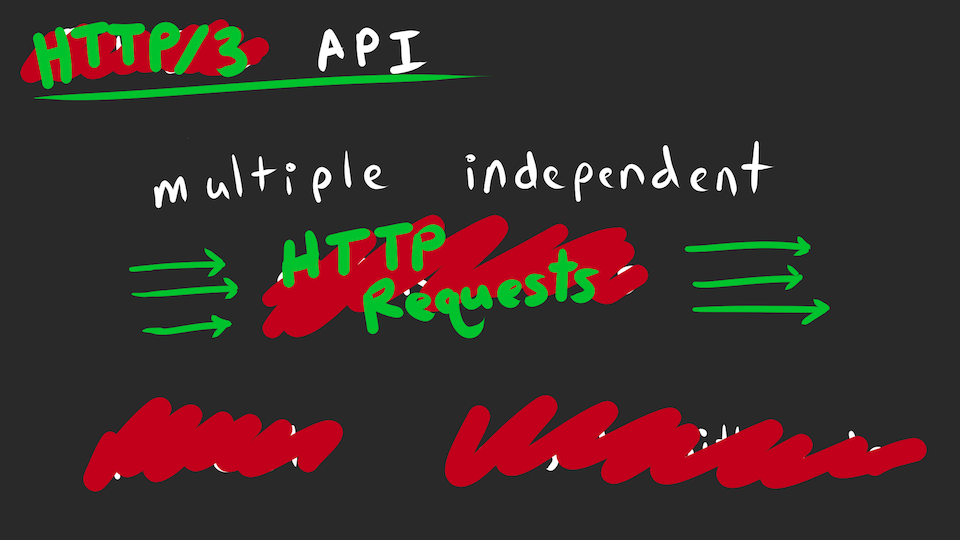

HTTP

And that’s exactly why QUIC was created. It turns out HTTP suffers from both of these issues depending on the version:

- HTTP/1 used a connection pool, often up to 6 connections per domain.

- HTTP/2 multiplexed requests, introducing head-of-line blocking.

Delete that shit and use HTTP/3.

However, as a humble (and handsome) application developer, I don’t care about HTTP/3.

The problem is that the HTTP version is transparent to my application. If you visit my website, you can use any version of HTTP and basically it all works the same. However, if I issue a ton of requests in parallel in an attempt to utilize QUIC’s concurrency, then my application will absolutely choke on the older HTTP versions.

Don’t get me wrong, HTTP/3 might be an improvement, but it’s an incremental improvement. It’s not exciting unless you’re a CDN vendor for all the reasons I’ll mention below.

WebTransport

And frankly, it’s often just not worth fighting against HTTP semantics. If you want to make a web application that utilizes QUIC, then just use QUIC directly.

Fortunately we have WebTransport for that. It mostly exposes the QUIC API and is supported in Chrome/Edge/Firefox with Safari coming soon™.

You can think of WebTransport as a WebSocket replacement, but using QUIC instead of TCP.

The Advanced Shit

Anyway, time to get technical. Most of this won’t matter for your everyday humble (and handsome) application developer, but maybe you’ll find something useful.

I figured out most of these while writing my own QUIC implementation. But don’t be me. Use an existing implementation instead. I’m now a Rust fanboye so I’m using Quinn with WebTransport. Cloudflare’s Quiche is also quite nice and interfaces with C/C++.

But you can read the RFCs if you’re mega bored on a plane. They’re extremely well-written and designed if you’re into that sort of thing.

yes i did just spend an hour of my rapidly depleting time left on this earth to trace a dead meme about the RFC numbers

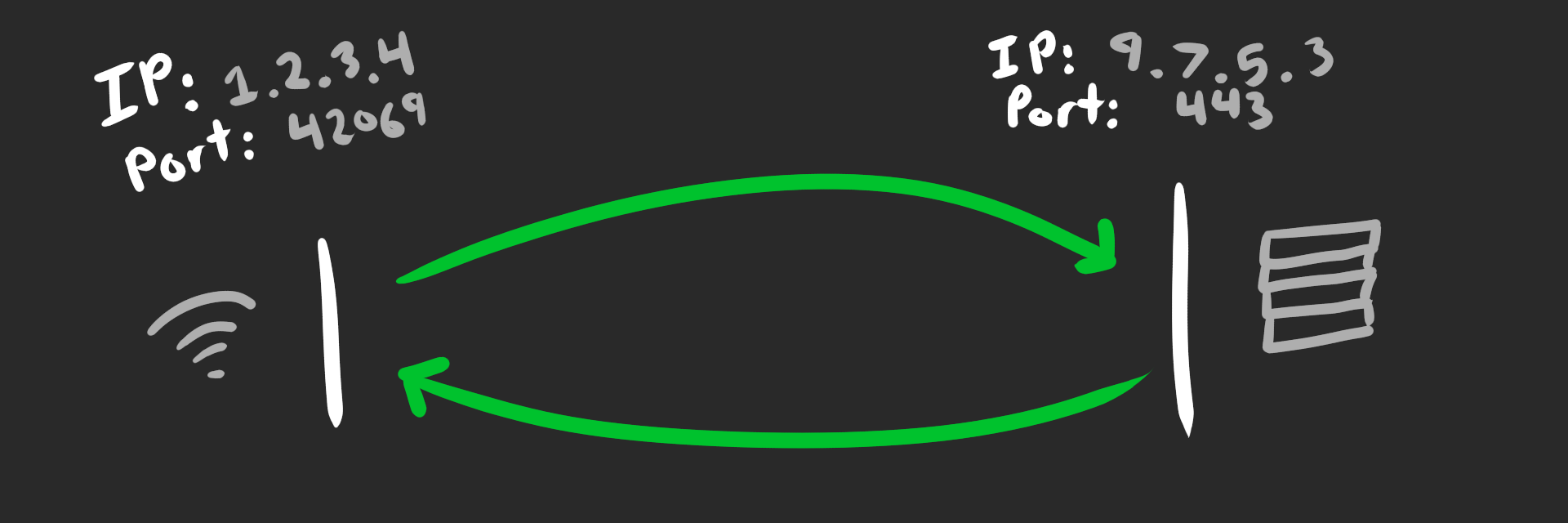

Connection ID

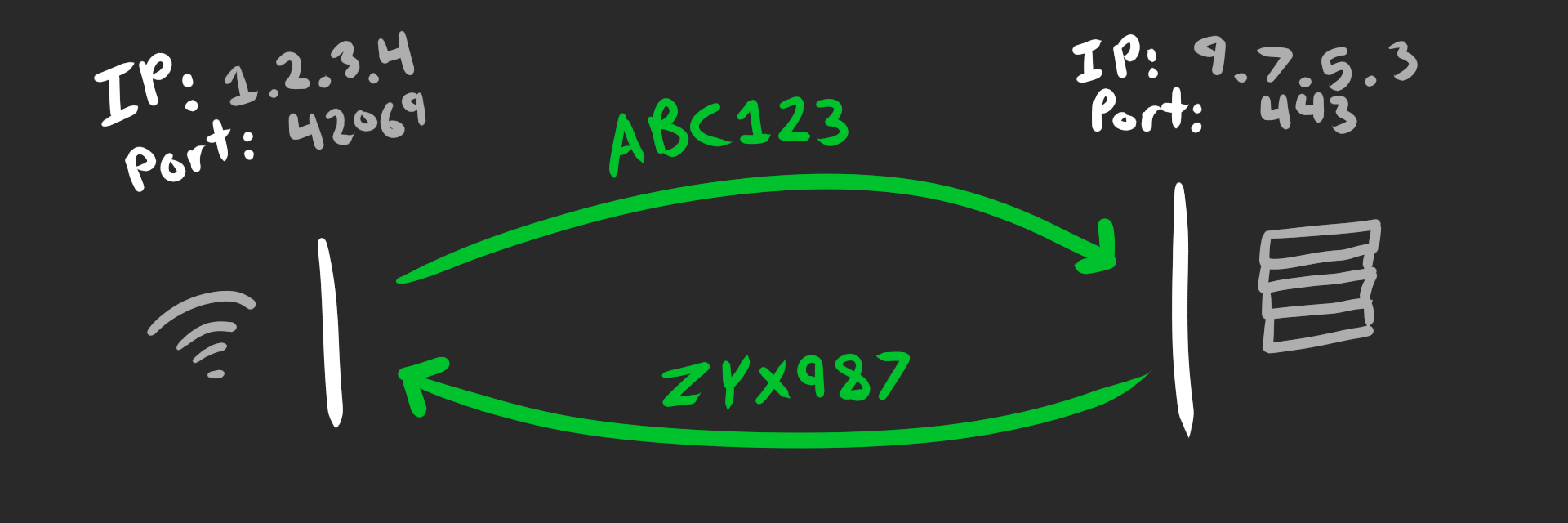

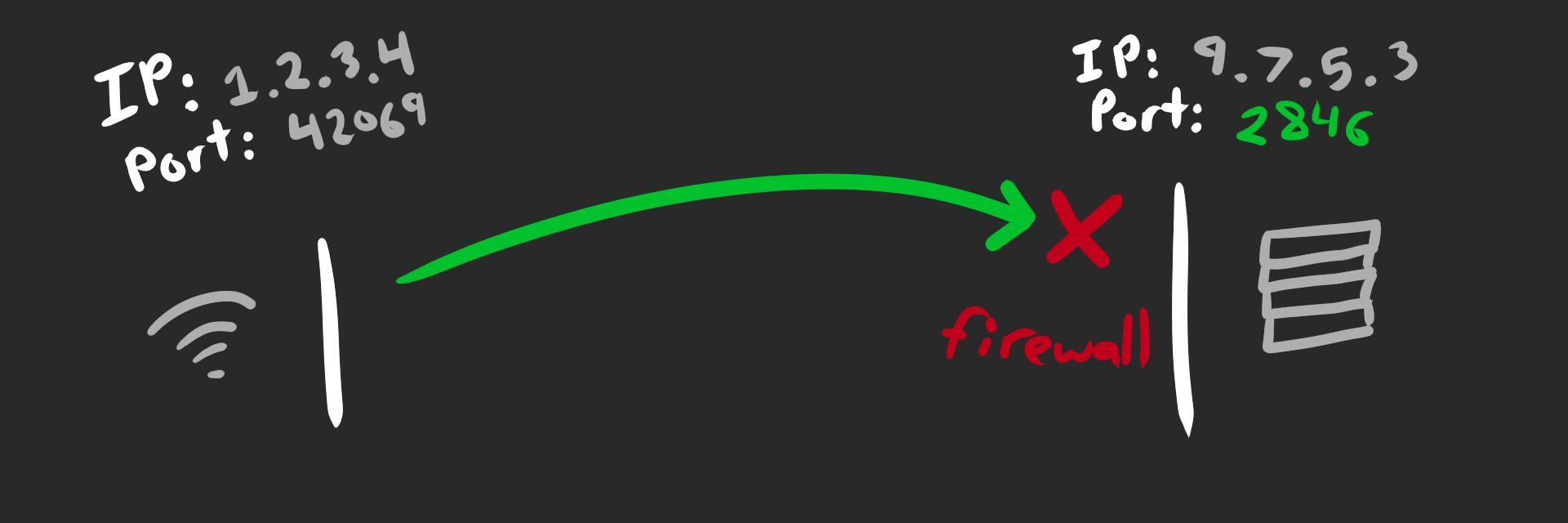

If you took a networking class, I’m sorry. But you might remember that TCP connections are identified by a 4-tuple. The kernel uses this information to map incoming packets to the correct socket/connection:

- source IP

- source port

- destination IP

- destination port

Each IP packet contains the source ip/port and destination ip/port, so you know who sent it and where to send the reply. Kinda like mail if anybody is old enough to remember that.

That’s gone with QUIC.

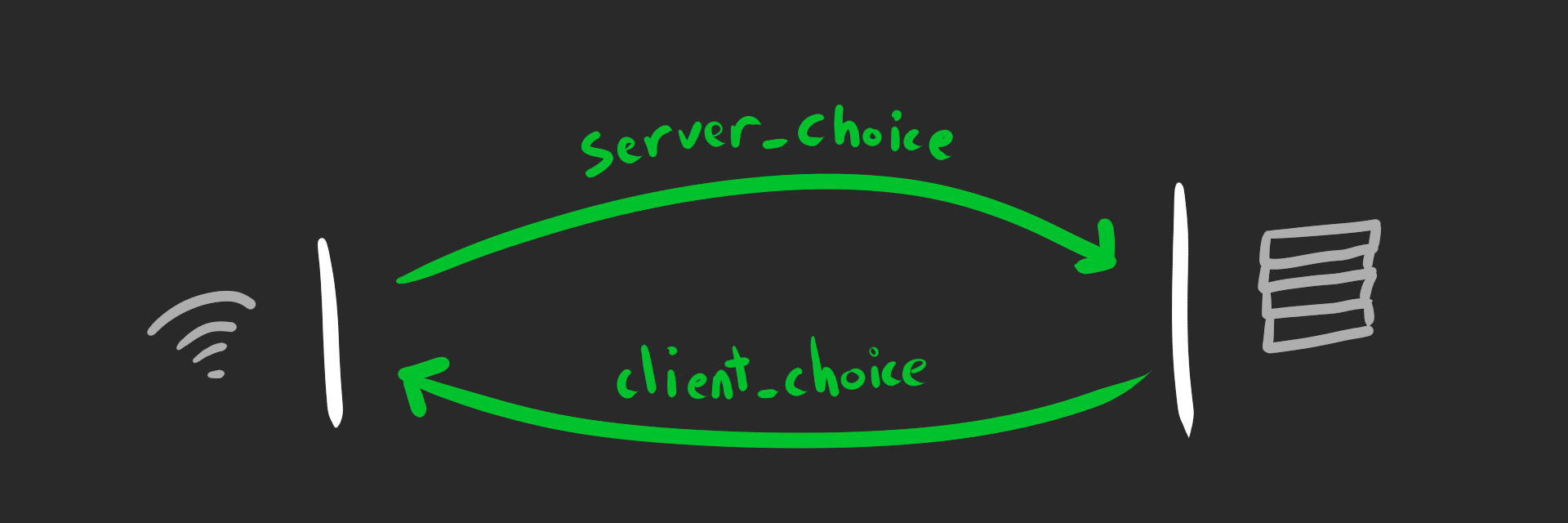

QUIC connections are instead identified by a Connection ID. This is an opaque blob of data, chosen by the receiver, sent in the header of every QUIC packet.

This might seem inconsequential at first, but it’s actually a huge deal.

Roaming

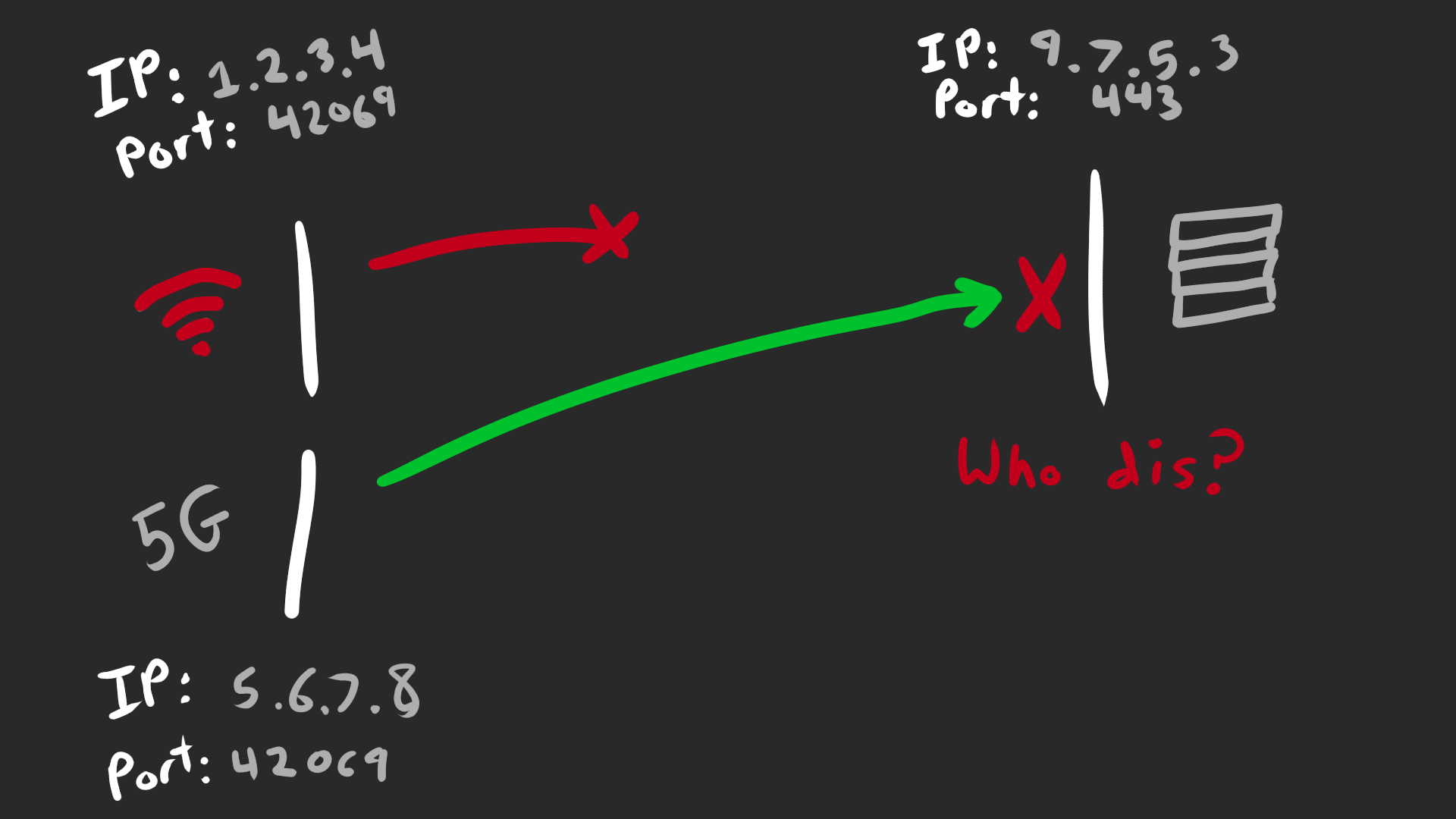

Ever switch between cellular and WiFi? Of course you have, you have a phone. When you switch networks, your source IP/port changes.

With TCP, this changes the 4-tuple. The server will silently discard any packets from the unknown source, severing the connection.

The application needs to detect this and retry, dialing a new connection and reissuing any pending requests. Retry logic is always a pain to get right and often users will just have to refresh manually.

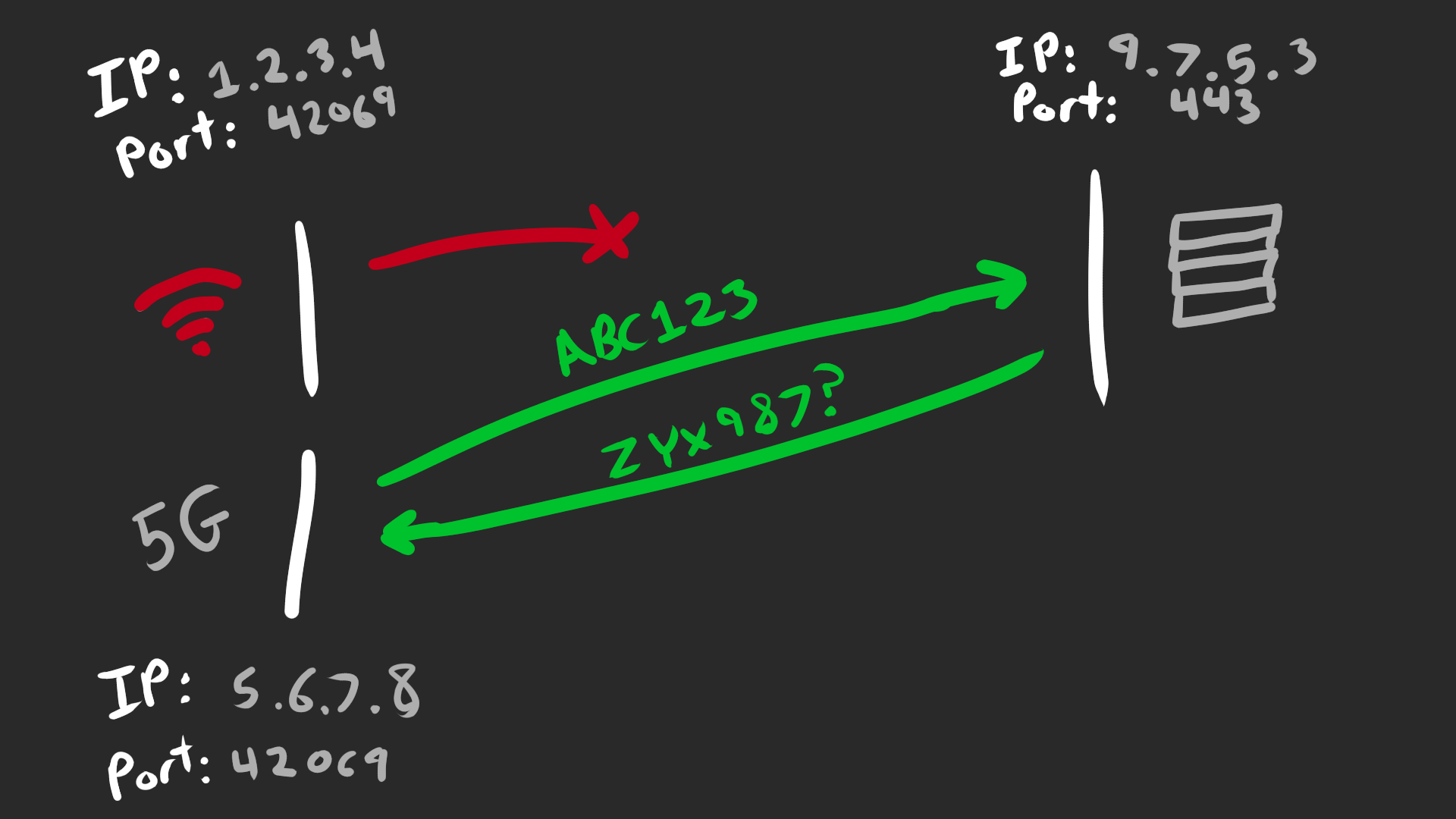

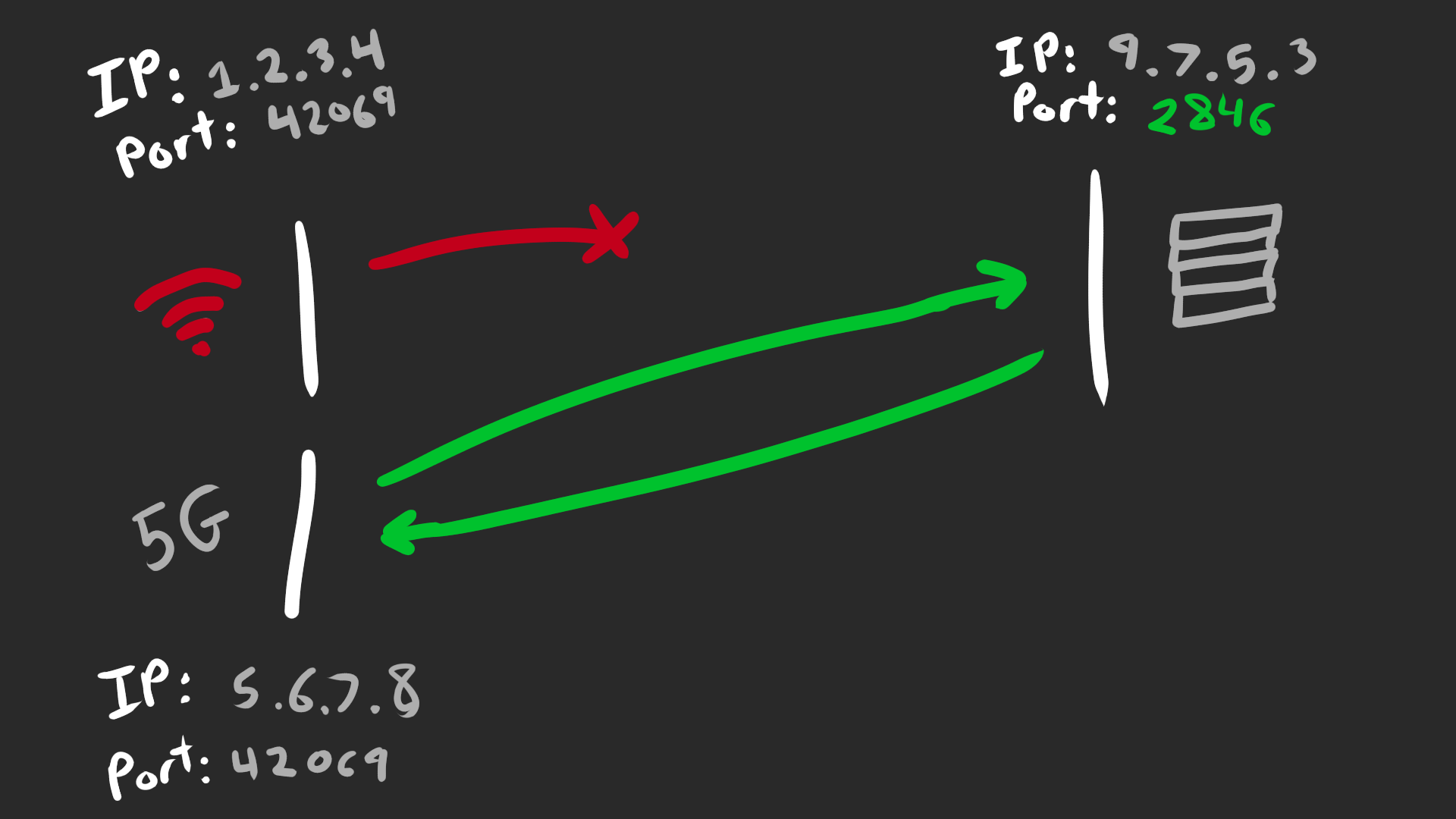

With QUIC, the source address will change but the Connection ID remains constant. The QUIC stack will transparently validate the new path (to prevent spoofing attacks) before switching to it. The application doesn’t need to do anything, it just works™
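Here’s a rough sketch of what the server side of that looks like: look the connection up by ID instead of by address, and only switch to a new address after the peer echoes a random challenge from it. The `Server` and `Connection` classes and their method names are made up for illustration; a real QUIC stack does this with PATH_CHALLENGE and PATH_RESPONSE frames and a lot more bookkeeping.

```python
import os
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Addr = Tuple[str, int]  # (ip, port)


@dataclass
class Connection:
    """Hypothetical server-side connection state, for illustration only."""
    cid: bytes
    peer: Addr  # the address we currently send to
    pending: Dict[bytes, Addr] = field(default_factory=dict)  # challenge -> candidate address


class Server:
    def __init__(self) -> None:
        # Keyed by Connection ID, NOT by the 4-tuple.
        self.by_cid: Dict[bytes, Connection] = {}

    def accept(self, peer: Addr) -> Connection:
        conn = Connection(cid=os.urandom(8), peer=peer)
        self.by_cid[conn.cid] = conn
        return conn

    def lookup(self, cid: bytes) -> Optional[Connection]:
        """The source address plays no part in finding the connection."""
        return self.by_cid.get(cid)

    def challenge(self, conn: Connection, src: Addr) -> bytes:
        """A packet arrived from a new address: probe it with 8 random bytes."""
        data = os.urandom(8)
        conn.pending[data] = src
        return data

    def on_path_response(self, conn: Connection, src: Addr, data: bytes) -> bool:
        """Switch paths only if the challenge came back from the address we probed."""
        if conn.pending.get(data) != src:
            return False  # spoofed or stale response: keep the old path
        del conn.pending[data]
        conn.peer = src
        return True
```

A client that hops from WiFi to cellular would look something like this from the server’s point of view:

```python
server = Server()
conn = server.accept(("203.0.113.7", 50000))             # handshake over WiFi
found = server.lookup(conn.cid)                          # same ID, new source address
token = server.challenge(found, ("198.51.100.9", 41000))
server.on_path_response(found, ("198.51.100.9", 41000), token)
```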

NAT Rebinding

In the above scenario, the client could know that its IP address changed when switching networks. Rather than wait for the connection to time out, a good client can proactively reconnect.

However, that’s not true with NATs. NATs suck. A NAT can transparently rebind your address without your knowledge, usually after an unspecified period of inactivity but sometimes just because they suck.

In TCP land, a NAT rebinding is fatal, as the 4-tuple changes and packets will get silently discarded. The application has no idea that this happened and the connection appears to hang. It’s obnoxious and why you need some form of keep-alive to both detect and fend off any NAT rebinding.

In QUIC land, the Connection ID saves the day. It still takes 1 RTT for QUIC to verify the new path but it just works™

Firewalls

I’ve been focusing on TCP quite a lot to simplify the narrative, but now it’s time to pick on WebRTC instead. It’s complicated, but WebRTC actually identifies connections based on the 2-tuple:

- destination IP

- destination port

This means the source IP/port can change without severing the connection: ez roaming and NAT rebinding support!

The chad 2-tuple doesn’t care about the source address. The unique destination port tells us the connection.

But why doesn’t QUIC do this instead? Two terrifying words: corporate firewalls.

The server needs to open a unique port for each connection. However, corporate firewalls often block all but a handful of ports for “security reasons”. At one point I probed the Twitch corporate network and found that only around 30 UDP ports were open; everything else was blocked.

The beta 2-tuple failed to connect, oops. Turns out the port was too unique for the firewall.

QUIC instead uses udp/443 for all connections.

This is huge because firewalls will often allow port 443 for HTTPS but block almost everything else.

If you use QUIC, you automatically leverage this firewall hole punching.

Note that you can (and I have) hack a WebRTC server to listen on udp/443 instead.

However, just like TCP, you lose roaming support because you rely on the 4-tuple to identify connections.

Fun fact: a QUIC client can also use the same IP and port for all outgoing connections. There’s no need for ephemeral ports!

Load Balancing

When you use the internet, you’re almost never connecting to a single server. There’s secretly a fleet of servers behind a fleet of load balancers, distributing the load.

You can’t see them, but they’re watching. Waiting.

Connection ID

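Like I mentioned earlier, the Connection ID is an opaque blob of data chosen by the receiver. The receiver can use multiple IDs, issuing or retiring them at will, and the length is variable: up to 20 bytes in QUIC version 1, though the version-independent header format leaves room for up to 255.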

This is huge. Your friendly neighborhood server admin is salivating.

Since a server chooses its own connection ID, it can encode session information into it. This can include routing information, echoed by the client in the header of each packet. Basically you can encode whatever load balancing information you want.

There’s an entire draft dedicated to cool shit you could do with this. Here’s a free idea: encode the name of the backend server into the Connection ID. Now you have sticky sessions without a lookup table!

That being said:

- Overhead matters: The connection ID is repeated in every packet (and QUIC version 1 caps it at 20 bytes), so don’t waste the space.

- Security matters: The connection ID is one of the few things sent in plaintext, so make sure whatever you encode in it is encrypted/unguessable.

But there’s a lot of creative stuff you can do!
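To make the sticky session idea concrete, here’s a minimal sketch: the server stuffs a 2-byte backend ID into the front of the Connection ID, and the load balancer reads it back out of every packet. The layout, the function names, and the `backends` table are all invented for this example and this is not the format from the load balancing draft. It’s also plaintext, which ignores the security warning above, so treat it as a toy and encrypt the ID for anything real.

```python
import os
import struct

CID_LEN = 8  # bytes; QUIC version 1 allows at most 20


def new_connection_id(server_id: int) -> bytes:
    """Server side: 2-byte backend ID followed by random bytes."""
    return struct.pack("!H", server_id) + os.urandom(CID_LEN - 2)


def route(packet_cid: bytes, backends: dict) -> str:
    """Load balancer side: pick the backend straight from the ID.

    There is no per-session lookup table, only the static list of backends.
    """
    (server_id,) = struct.unpack("!H", packet_cid[:2])
    return backends[server_id]
```

Usage would be something like:

```python
backends = {1: "edge-a", 2: "edge-b"}   # hypothetical backend names
cid = new_connection_id(2)
route(cid, backends)                    # "edge-b", no matter which address the packet came from
```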

Anycast

OH BOY. This one is extremely well-hidden but extremely powerful.

During the handshake, the server can reply with an optional and kinda obscure preferred_address. The client will try to send packets to this address instead, after validating it of course. Why on earth does this matter?

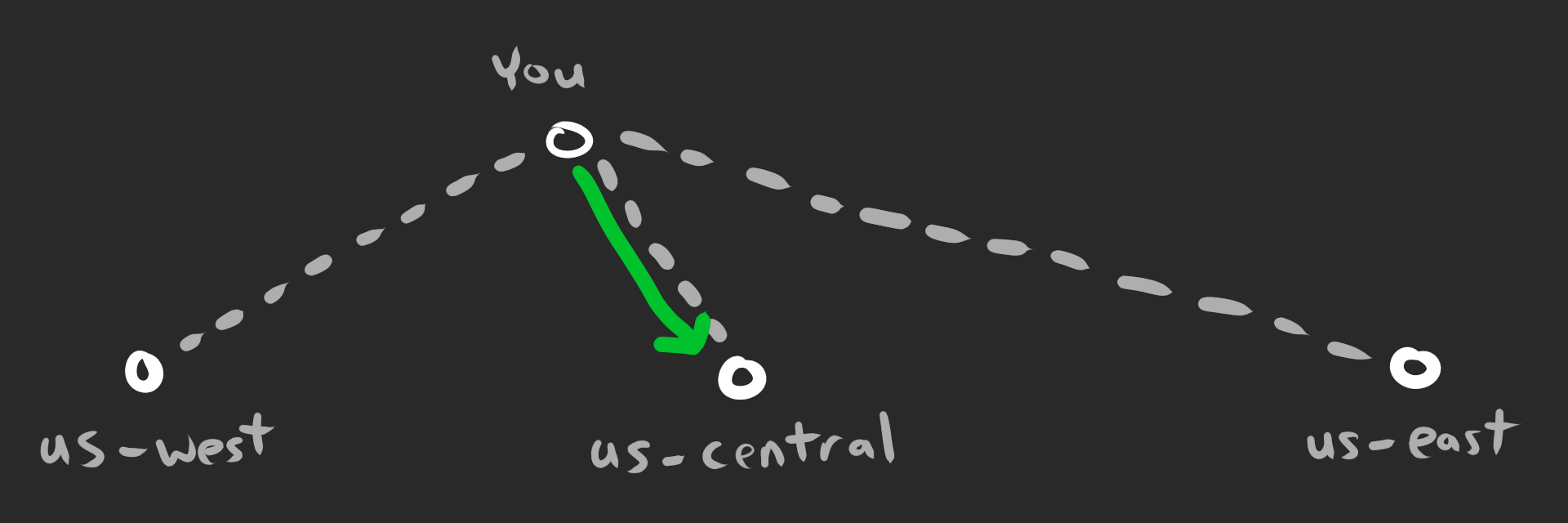

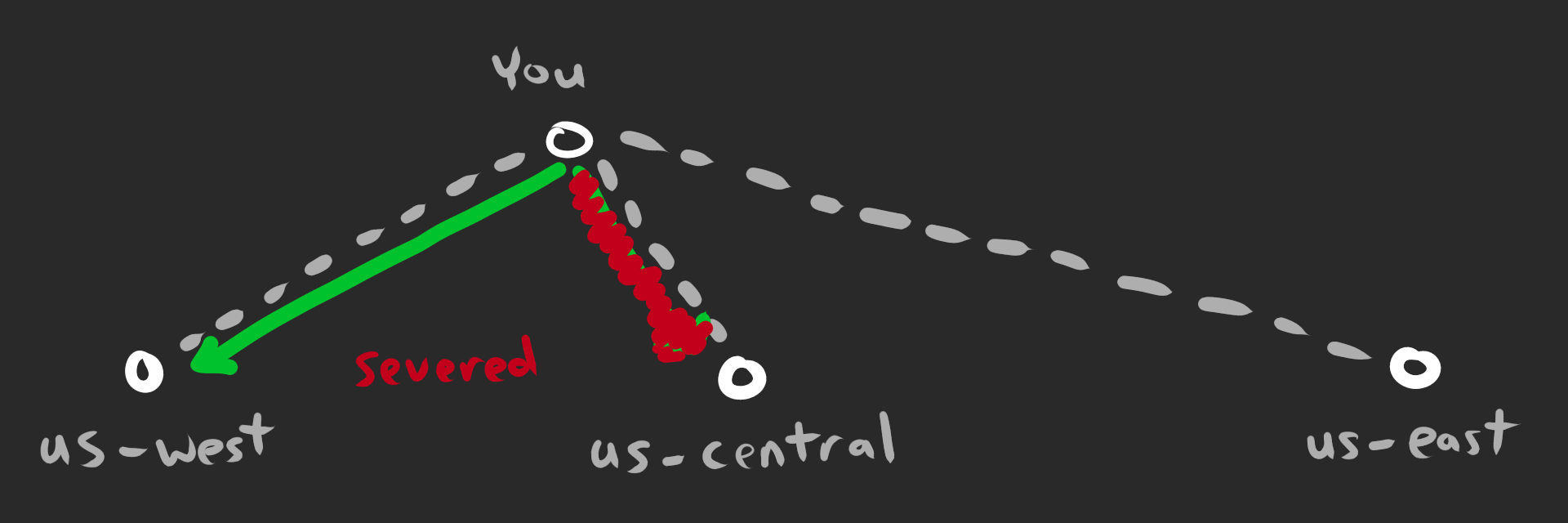

So there’s this thing called anycast. Anycast allows multiple servers to advertise the same IP address and any packets will get routed to the “closest” server (based on BGP). This is amazing for global load balancing since it also gives network administrators control over routing. The path to the closest server might be congested, so ISPs can choose a different “closest” server instead.

However, if the “closest” server changes for any reason (flapping), unfortunately a different server will receive your packets. Even with QUIC, the new server will discard these unknown packets and the connection is severed.

Anycast is usually reserved for UDP protocols like DNS because of this flapping issue. It’s usually better to use unicast with TCP to ensure consistent routing.

However, what if you could use anycast for discovery and then switch to unicast for consistency?

That’s exactly what preferred_address is for.

Every server can advertise a shared anycast address used for the TLS handshake only. Afterwards, the clients are told to switch to the server’s unique unicast address. The end result is a stable connection to the closest server, transparent to the application!

There are other ways of implementing this today, but they kind of suck. GeoDNS is quite inaccurate since it (usually) involves a crude IP lookup database. An application can use something like Happy Eyeballs to try multiple addresses in parallel, but it’s expensive and racy.

My bold prediction is that QUIC’s anycast handshake will take over once it’s widely deployed.

Privacy

QUIC takes a paranoid approach to privacy. TLS encryption is required and even packet headers are encrypted to piss off middleboxes.

What are middleboxes? Well it’s a fancy word for routers, the boxes that figure out how to get IP packets from point A to point B. The problem is that these middleboxes can inspect and potentially modify packets. A middlebox with evil intentions can monitor traffic, throttle traffic, or even inject ads.

The QUIC solution is to encrypt everything*. Even the packet number is encrypted because fuck middleboxes. But please note that this is not perfect:

- The QUIC handshake uses a hard-coded initial secret. A middlebox can decrypt this traffic to determine the server name unless you’re using ECH (the successor to ESNI).

- The QUIC connection ID will leak information about the number of connections and their activity. MASQUE allows you to nest multiple QUIC connections, effectively operating as a VPN.
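To show why the first bullet is true, here’s a sketch of how anyone on the path can derive the client’s Initial packet protection keys. The only inputs are a salt published in RFC 9001 and the Destination Connection ID, which is sitting in plaintext in the client’s first packet. The function names are mine; the sketch stops at key derivation and doesn’t actually remove header protection or decrypt anything.

```python
import hashlib
import hmac
import struct

# Salt for QUIC version 1, published in RFC 9001 section 5.2.
INITIAL_SALT_V1 = bytes.fromhex("38762cf7f55934b34d179ae6a4c80cadccbb7f0a")


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract with SHA-256 is a single HMAC keyed by the salt."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand_label(secret: bytes, label: str, length: int) -> bytes:
    """TLS 1.3 HKDF-Expand-Label with an empty context."""
    full_label = b"tls13 " + label.encode()
    # HkdfLabel: 2-byte output length, length-prefixed label, zero-length context.
    info = struct.pack("!H", length) + bytes([len(full_label)]) + full_label + b"\x00"
    out, block, counter = b"", b"", 1
    while len(out) < length:
        block = hmac.new(secret, block + info + bytes([counter]), hashlib.sha256).digest()
        out += block
        counter += 1
    return out[:length]


def client_initial_keys(dcid: bytes) -> dict:
    """Everything here is computable by a middlebox that saw the first packet."""
    initial = hkdf_extract(INITIAL_SALT_V1, dcid)
    client = hkdf_expand_label(initial, "client in", 32)
    return {
        "key": hkdf_expand_label(client, "quic key", 16),  # AES-128-GCM key
        "iv": hkdf_expand_label(client, "quic iv", 12),
        "hp": hkdf_expand_label(client, "quic hp", 16),    # header protection key
    }
```

Feed it the Destination Connection ID from a captured Initial packet, e.g. `client_initial_keys(bytes.fromhex("8394c8f03e515708"))`, and you have what you need to read the ClientHello. The encryption here is obfuscation against lazy middleboxes, not secrecy.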

And just to pick on WebRTC some more: the SRTP header (including any extensions) is not encrypted. A middlebox can easily inspect the header, determine the type of WebRTC traffic and even figure out which participants are currently talking. And of course, WebRTC is notorious for leaking your IP address.

Attacks

UDP protocols are generally quite vulnerable to attacks, often amplifying them by accident. TCP SYN floods are a common DDoS vector too. QUIC is not immune to attacks either but there are some neat mitigation techniques.

Of course, you should know by now that Connection ID is amazing. A QUIC server can sign the Connection ID to prevent the client from tampering with it or spoofing it.

Why does this matter? Well, now a cooperating router (L3) can drop abusive packets as they enter the network. Otherwise, the packet would have to reach the QUIC server (L7) before it could be processed. This can be done in hardware for maximum efficiency especially since it requires no state.

In fact, the router could even send a stateless reset to close a connection if it detects abuse. The server chooses the reset token during the handshake, and if it’s deterministic then the router can compute it too.
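A minimal sketch of both tricks, assuming the server and the router share a secret key. The ID layout (8 random bytes plus a 4-byte truncated MAC) and the function names are made up for illustration; the one real constraint is that a stateless reset token is 16 bytes.

```python
import hashlib
import hmac
import os

TAG_LEN = 4     # bytes of MAC appended to the ID (hypothetical layout)
TOKEN_LEN = 16  # stateless reset tokens are 16 bytes


def issue_connection_id(key: bytes) -> bytes:
    """Server: random bytes plus a truncated MAC over them."""
    body = os.urandom(8)
    tag = hmac.new(key, b"cid" + body, hashlib.sha256).digest()[:TAG_LEN]
    return body + tag


def is_valid(key: bytes, cid: bytes) -> bool:
    """Router: check the MAC without keeping any per-connection state."""
    body, tag = cid[:-TAG_LEN], cid[-TAG_LEN:]
    expected = hmac.new(key, b"cid" + body, hashlib.sha256).digest()[:TAG_LEN]
    return hmac.compare_digest(tag, expected)


def reset_token(key: bytes, cid: bytes) -> bytes:
    """Deterministic token: anyone holding the key can recompute it from the ID."""
    return hmac.new(key, b"reset" + cid, hashlib.sha256).digest()[:TOKEN_LEN]
```

The router drops anything where `is_valid` fails before it ever reaches the QUIC server, and it can call `reset_token` with the same key to build a stateless reset for a connection it has never seen before. A 4-byte tag is easy to brute force given enough packets, so pick the length based on how much overhead you can stomach.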

There’s a world of creative architectures that are just waiting to be explored. I’m sure the folks at Google and Cloudflare are working on some cool shit right now.

Congestion Control

QUIC’s congestion control is modeled after TCP but there are some important differences to call out:

- QUIC packets have unique numbers, even retransmissions, so the sender can tell exactly which transmission an ACK refers to.

- QUIC ACKs include unbounded* ranges so the receiver can more accurately report individual losses.

- QUIC ACKs include the batching delay so the sender can compute accurate RTT measurements.
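The last two bullets are easy to sketch. The first function collapses the set of received packet numbers into ranges, which is roughly the information an ACK frame carries (the real wire encoding uses gaps and lengths, not pairs). The second applies the ACK delay to an RTT sample the way RFC 9002 describes. The function names and the millisecond units are my own choices.

```python
def ack_ranges(received: set) -> list:
    """Collapse received packet numbers into (first, last) ranges, largest first."""
    ranges = []
    for pn in sorted(received, reverse=True):
        if ranges and ranges[-1][0] == pn + 1:
            ranges[-1] = (pn, ranges[-1][1])  # extend the current range downward
        else:
            ranges.append((pn, pn))           # a gap: start a new range
    return ranges


def rtt_sample(sent_at: float, acked_at: float, ack_delay: float, min_rtt: float) -> float:
    """Subtract the peer's reported delay unless that would dip below the minimum RTT."""
    latest = acked_at - sent_at
    if latest >= min_rtt + ack_delay:
        return latest - ack_delay
    return latest
```

For example:

```python
ack_ranges({1, 2, 3, 5, 8, 9})       # [(8, 9), (5, 5), (1, 3)], so 4, 6 and 7 are missing
rtt_sample(0.0, 50.0, 10.0, 30.0)    # 40.0 ms: the receiver sat on the ACK for 10 ms
```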

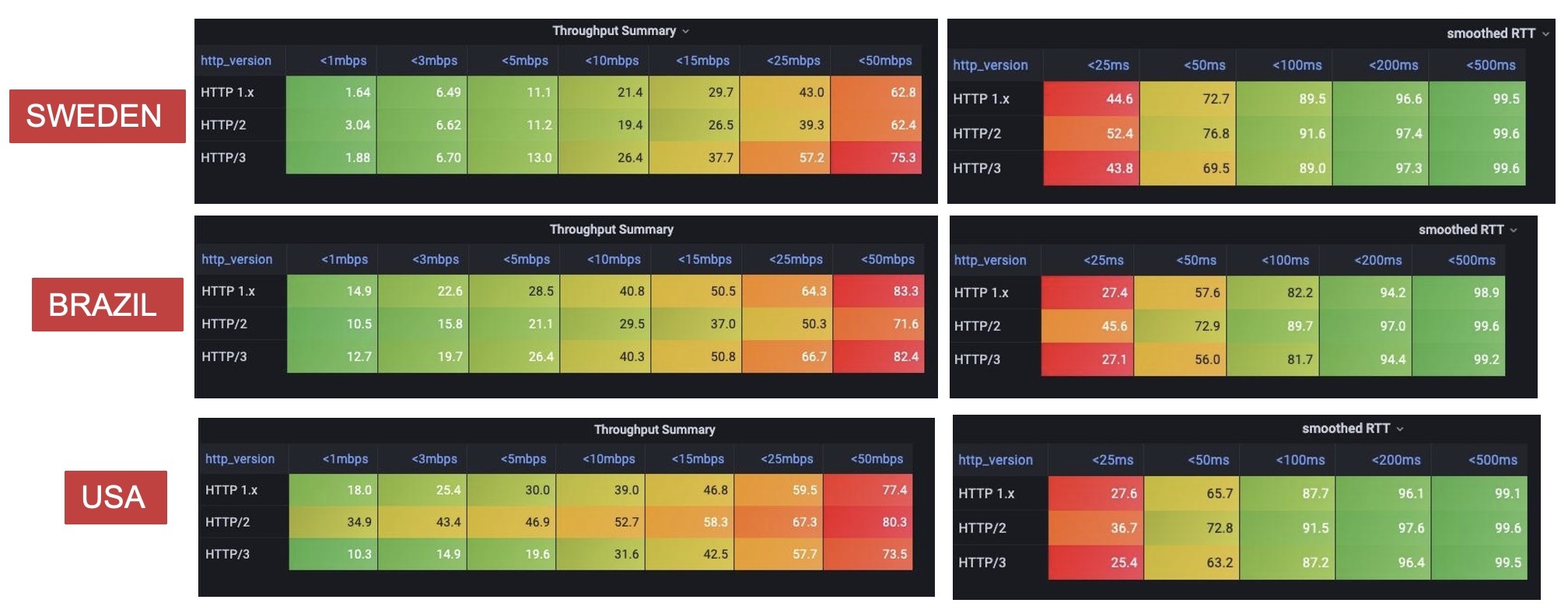

Does this make a noticeable difference? Not really, but QUIC’s congestion control should be marginally better. QUIC implementations are still being tuned and optimized so take any initial results with a grain of salt.

Fun fact: QUIC ACKs are themselves acknowledged, so you effectively ACK an ACK. Subsequent ACKs don’t include the ACK’d ACK packet numbers, saving bandwidth!

Deployable

However, there is a MAJOR difference between TCP and QUIC congestion control. TCP is implemented in the kernel which means it’s difficult or impossible to modify.

- A Windows client is stuck with the crappy TCP implementation in Windows.

- An OSX client is stuck with the crappy TCP implementation in OSX.

- An Android client is stuck with the crappy TCP implementation in Android.

- An iOS client is stuck with the crappy TCP implementation in iOS.

- A Linux client is stuck with the crappy TCP implementation in Linux.

You get the point. The default TCP congestion control for every OS (as far as I can tell) is loss-based and suffers from bufferbloat, making it poor for latency-sensitive applications. Note that you can configure or install a custom kernel to change TCP’s behavior, but that’s primarily for power users or server operators.

QUIC, on the other hand, is not implemented in the kernel (yet). When you ship your client, you ship your own, vendored QUIC implementation. This means your application can use better congestion control algorithms (ex. BBR), which has been impossible until now.

In fact, you can even experiment with your own congestion control algorithms. I’ve made some improvements to BBR to better support application-limited traffic like live video. It’s also significantly easier to run experiments in userspace.

Datagrams

Datagrams are basically UDP packets. They are unreliable, unordered, and have a maximum size (at least 1.2KB).

QUIC supports datagrams via an extension. This extension is required as part of WebTransport, which means datagram support in the browser!

However, there are some caveats:

- Datagrams are congestion controlled. QUIC datagrams are acknowledged behind the scenes solely to compute the max send rate. An application can’t implement its own congestion control since it’s throttled by the QUIC congestion control.

- Datagrams are acknowledged but not exposed. An application has to implement its own reliability mechanism instead, so the QUIC ACKs are mostly wasted.

- Datagrams can’t be sent to arbitrary destinations. If you want to send packets to multiple ports, then you have to establish separate QUIC connections.

- Datagrams may be coalesced into a single QUIC packet. This is great for efficiency because it means fewer packets sent. However, it means an application can’t rely on datagrams actually being independent, which is kind of the point.

HOT TAKE ALERT: never use QUIC datagrams. They have all the downsides of UDP, none of the benefits, and throw some foot-guns into the mix. They suck.

You should use QUIC streams instead. Make a QUIC stream for each logical unit (ex. video frame) and prioritize/close them as needed. You get fragmentation, ordering, reliability, flow control, etc for free and never have to think about MTUs again.
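Here’s a toy model of what “prioritize/close them as needed” could look like for live video. Each frame gets its own stream, the sender spends its congestion window on the newest frames first, and frames that fall too far behind get reset instead of retransmitted. The `FrameStream` class, the `schedule` function, and the newest-first policy are all assumptions for this sketch; a real QUIC library exposes priorities and resets through its own API.

```python
from dataclasses import dataclass


@dataclass
class FrameStream:
    """One hypothetical QUIC stream carrying one video frame."""
    frame_id: int
    remaining: int       # bytes still to send
    reset: bool = False  # True once we have given up on this frame


def schedule(streams: list, budget: int, max_age: int) -> list:
    """Spend `budget` bytes on the newest frames first; reset frames that are too old.

    Returns (frame_id, bytes_sent) pairs in send order.
    """
    if not streams:
        return []
    newest = max(s.frame_id for s in streams)
    sent = []
    for s in sorted(streams, key=lambda s: s.frame_id, reverse=True):
        if newest - s.frame_id > max_age:
            s.reset = True  # too late to be useful: abandon it
            continue
        if budget == 0 or s.remaining == 0:
            continue
        n = min(budget, s.remaining)
        s.remaining -= n
        budget -= n
        sent.append((s.frame_id, n))
    return sent
```

With frames 1, 2 and 5 queued, a 2000 byte budget and `max_age=3`, frame 5 goes out in full, frame 2 gets the leftovers, and frame 1 is reset. Doing the same with datagrams means writing the fragmentation, retransmission, and cancellation logic yourself.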

Media over QUIC

I know this is a positive blog post but I want to dunk on QUIC datagrams a bit more.

The usage of datagrams is actually a core difference between the Media over QUIC and the RTP over QUIC efforts. Both are trying to improve WebRTC, but I’m on team “make a brand new protocol”.

All of the reasons above actually prevent implementing RTP naively by using QUIC datagrams instead of UDP:

- Datagrams are congestion controlled. It means you can’t implement GCC or SCReAM on top of QUIC datagrams.

- Datagrams are acknowledged but you can’t use them. You end up sending both ACKs and NACKs in different layers, increasing overhead and hurting performance.

- Datagrams can’t be sent to arbitrary destinations. It means you can’t send RTP and RTCP to different ports; they have to be muxed. (not a big deal)

- Datagrams may be coalesced into a single QUIC packet. It makes it more difficult to implement FEC, since QUIC datagrams may be secretly coalesced.

This is just an example for RTP but the same is probably true for your favorite UDP-based protocol. Use QUIC streams instead.

NOTE: Media over QUIC will likely support datagrams, primarily for experimentation. I’ve already stated my opinion but unfortunately, I’m not the boss of the IETF.

STREAM_FIN

Written by @kixelated.

I’m super excited about QUIC and WebTransport. There’s never been a better time to be a transport protocol nerd.

On a personal note, I’m now gainfully employed.

That means I’m getting paid to actually make useful stuff instead of writing informative blog posts.

Unfortunate for you, but fortunate for me since I get health insurance now (thanks America).

I won’t be able to devote as much time to cheerleading for Media over QUIC but the standard is still full steam ahead. Remember: it’s co-authored by individuals from Google, Meta, Cisco, Akamai, along with the IETF as a whole.

Join the discord server though.